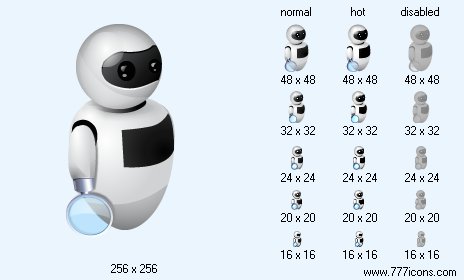

Finder Icon


Image sizes: 256x256, 48x48, 32x32, 24x24, 20x20, 16x16

File formats: BMP, GIF, PNG, ICO

Automatic analysis of texts

It turns out that all texts written by people are built according to the same rules, and no one can get around them. Whatever language is used and whoever the author is, a classic or a graphomaniac, the internal structure of the text remains the same. It is described by the laws of G. K. Zipf. He suggested that natural human laziness (a property of any living creature, in fact) leads to long words occurring in a text less often than short ones. Based on this postulate, Zipf derived two universal laws.

Zipf's first law: "rank - frequency"

Choose any word and count the number of times it occurs in the text. This quantity is called the frequency of occurrence of the word. Measure the frequency of every word in the text. Some words will have the same frequency, that is, they occur in the text an equal number of times. Group them, taking only one value from each group. Arrange the frequencies in descending order and number them. The serial number of a frequency is called the rank of frequency. Thus, the most common words have rank 1, the next most common have rank 2, and so on. Now pick a word at random and determine the probability of encountering it in the text. This probability is equal to the ratio of the frequency of occurrence of the word to the total number of words in the text.

Probability = Frequency of occurrence of the word / Number of words
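The counting procedure described above can be sketched in Python. This is only an illustration under stated assumptions: the function names (`word_frequencies`, `frequency_ranks`, `word_probability`) and the sample sentence are invented for this example, and a "word" is assumed to be any run of letters, which is a simplification (apostrophes and hyphens split words).

```python
import re
from collections import Counter


def word_frequencies(text):
    """Return (Counter of word -> frequency, total number of words)."""
    # Assumption: a word is a run of letters; case is ignored.
    words = re.findall(r"[^\W\d_]+", text.lower())
    return Counter(words), len(words)


def frequency_ranks(counts):
    """Map each distinct frequency to its rank (1 = highest frequency)."""
    # Words with equal frequency form one group, so only distinct
    # frequency values are ranked, in descending order.
    distinct = sorted(set(counts.values()), reverse=True)
    return {freq: rank for rank, freq in enumerate(distinct, start=1)}


def word_probability(word, counts, total):
    """Probability = frequency of occurrence of the word / number of words."""
    return counts[word] / total


# Hypothetical sample text, chosen only to keep the numbers small.
sample = "the cat and the dog and the bird"
counts, total = word_frequencies(sample)
ranks = frequency_ranks(counts)

for word, freq in counts.most_common():
    print(word, freq, ranks[freq], word_probability(word, counts, total))
```

In this sample, "cat", "dog" and "bird" all occur once, so they share a single rank, exactly as the grouping step in the text prescribes.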

Zipf found an interesting pattern. It turns out that if you multiply the probability of finding a word in the text by its rank of frequency, the resulting value (C) is approximately constant!

C = (Frequency of occurrence of the word x Rank of frequency) / Number of words
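To see the formula at work, here is a minimal Python sketch that computes C for every rank. The function name `zipf_constants` is hypothetical, and the input is a synthetic "text" deliberately built so that its frequencies are proportional to 1 / rank; real texts follow the law only approximately, so their values of C would scatter around a common level rather than coincide.

```python
import re
from collections import Counter


def zipf_constants(text):
    """Return a list of (rank, frequency, C) with C = frequency * rank / total."""
    # Assumption: a word is a run of letters; case is ignored.
    words = re.findall(r"[^\W\d_]+", text.lower())
    total = len(words)
    counts = Counter(words)
    # Rank the distinct frequency values in descending order.
    distinct = sorted(set(counts.values()), reverse=True)
    return [(rank, freq, freq * rank / total)
            for rank, freq in enumerate(distinct, start=1)]


# Synthetic data (not a real text): frequencies 60, 30, 20, 15, 12
# were chosen so that frequency * rank is the same for every rank.
synthetic = " ".join(
    ["alpha"] * 60 + ["beta"] * 30 + ["gamma"] * 20
    + ["delta"] * 15 + ["epsilon"] * 12
)

rows = zipf_constants(synthetic)
for rank, freq, c in rows:
    print(rank, freq, round(c, 3))
```

The value of C obtained from such a toy vocabulary says nothing about any real language; it only shows that the product of probability and rank stays the same from rank to rank when the law holds exactly.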

If we transform the formula a bit and then look in a mathematics handbook, we see that it is a function of the type y = k / x, whose graph is an equilateral hyperbola. Consequently, according to Zipf's first law, if the most common word occurs in the text, for example, 100 times, then the next most frequent word is unlikely to occur 99 times. The frequency of occurrence of the second most popular word will, with high probability, be close to 50. (Of course, statistics are never entirely exact: 50 or 52 makes little difference.)
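The transformation mentioned above can be written out explicitly. The symbols are introduced here only for illustration and do not appear in the original: f is the frequency of occurrence, r is the rank of frequency, and N is the number of words in the text.

```latex
% Zipf's first law, with f = frequency, r = rank, N = number of words
C = \frac{f \cdot r}{N}
\quad\Longrightarrow\quad
f = \frac{C N}{r}
% This has the form y = k / x with y = f, x = r and k = C N.

% Worked example: the most common word (r = 1) occurs 100 times,
% so k = f \cdot r = 100, and for the second rank
f(2) = \frac{100}{2} = 50
```

For a given text both C and N are fixed, so k = CN is a single number, and the frequency falls off in inverse proportion to the rank.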

The constant differs from language to language, but within a single language group it remains unchanged, whatever text we take. For example, for English texts the Zipf constant is approximately 0.1. How do Russian texts look from the perspective of Zipf's laws? They are no exception. An analysis of the Russian-language text files stored on my computer convinced me that the law holds there as well. For the Russian language, the Zipf coefficient turned out to be 0.06-0.07. Although these studies do not claim to be comprehensive, the universality of Zipf's law suggests that the data obtained are quite reliable.

Copyright © 2006-2022 Aha-Soft. All rights reserved.
